How big is the opportunity? Our research estimates that AI could contribute $15.7 trillion to the global economy by 2030, as a result of productivity gains and increased consumer demand driven by AI-enhanced products and services. AI solutions are diffusing across industries and affecting everything from customer service and sales to back-office automation. AI’s transformative potential continues to be top of mind for business leaders: our CEO survey finds that 72% of CEOs believe that AI will significantly change the way they do business in the next five years.

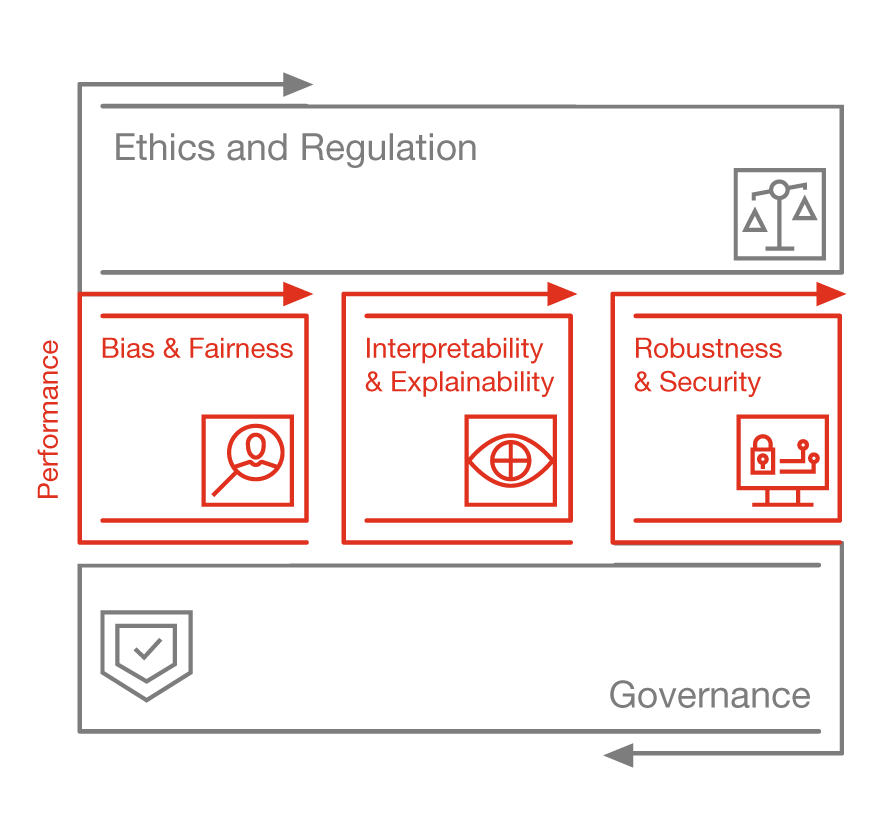

With great potential comes great risk. Are your algorithms making decisions that align with your values? Do customers trust you with their data? How is your brand affected if you can’t explain how AI systems work? It’s critical to anticipate problems and future-proof your systems so that you can fully realise AI’s potential. It’s a responsibility that falls to all of us — board members, CEOs, business unit heads, and AI specialists alike.

Source: PwC US - 2019 AI Predictions

Base: 1,001

Q: What steps will your organisation take in 2019 to develop AI systems that are responsible, that is, trustworthy, fair and stable?

AI algorithms that ingest real-world data and preferences as inputs run a risk of learning and imitating our biases and prejudices.

Performance risks include:

For as long as automated systems have existed, humans have tried to circumvent them. This is no different with AI.

Security risks include:

As with any other technology, AI should have organisation-wide oversight with clearly identified risks and controls.

Control risks include:

The widespread adoption of automation across all areas of the economy may impact jobs and shift demand to different skills.

Economic risks include:

The widespread adoption of complex and autonomous AI systems could result in “echo chambers” developing between machines, and could have broader impacts on human-to-human interaction.

Societal risks include:

AI solutions are designed with specific objectives in mind, and these may compete with the overarching organisational and societal values within which the solutions operate.

Ethical risks include:

Your stakeholders, including board members, customers, and regulators, will have many questions about your organisation's use of AI and data, from how it’s developed to how it’s governed. You need to be ready not only to provide the answers but also to demonstrate ongoing governance and regulatory compliance.

Our Responsible AI Toolkit is a suite of customisable frameworks, tools and processes designed to help you harness the power of AI in an ethical and responsible manner, from strategy through to execution. With the Responsible AI Toolkit, we’ll tailor our solutions to address your organisation’s unique business requirements and AI maturity.

Find out by taking our free Responsible AI Diagnostic, which drills down into questions like:

Whether you're just getting started or are getting ready to scale, Responsible AI can help. Drawing on our proven capability in AI innovation and deep global business expertise, we'll assess your end-to-end needs, and design a solution to help you address your unique risks and challenges.

Contact us today. Learn more about how to become an industry leader in the responsible use of AI.